I upgraded the lab by adding a new server, primarily to host an NFS server. I also added 2 x 10G NICs to every server, all connected through a MikroTik CRS309 desktop switch (running SwOS, i.e. SwitchOS, rather than RouterOS), which supports LACP. On the server side, I decided to bond both 10G NICs to get better aggregate network throughput when VMs on the other servers access the NFS server. It boiled down to a few simple server-side configuration steps, listed below.

Install ifenslave, which is required for bonding:

    # apt install ifenslave -y

If ifconfig is not already installed on the system, install it (it ships in the net-tools package):

    # apt install net-tools -y

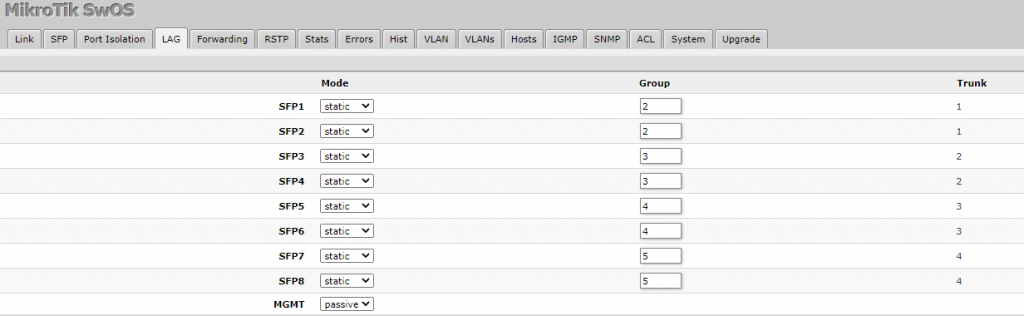

Configure the LAG groups on the switch side:

- SFP+ ports 1 and 2 are connected to server 1.
- SFP+ ports 3 and 4 are connected to server 2.
- SFP+ ports 5 and 6 are connected to server 3.
- SFP+ ports 7 and 8 are connected to server 4.

Load the bonding kernel module now, and add it to /etc/modules so that it is loaded at boot:

    # modprobe bonding
    # echo "bonding" >> /etc/modules
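
Running the `echo` line a second time appends a duplicate `bonding` entry. Below is a minimal idempotent sketch. The helper name `ensure_module_listed` is hypothetical and not part of any package. It assumes the one-module-per-line format of /etc/modules.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a module name to a modules file only if it is
# not listed yet, so re-running the setup does not create duplicate lines.
# The file defaults to /etc/modules (writing to it requires root).
ensure_module_listed() {
    local mod="$1" file="${2:-/etc/modules}"
    # -q: quiet, -x: whole-line match, -F: treat the name as a fixed string
    if ! grep -qxF -- "$mod" "$file" 2>/dev/null; then
        echo "$mod" >> "$file"
    fi
}
```

As root, `ensure_module_listed bonding` can replace the `echo` line and is safe to run any number of times.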

Update /etc/network/interfaces with the required bonding configuration.

Contents of /etc/network/interfaces on server 1:

    # This file describes the network interfaces available on your system
    # and how to activate them. For more information, see interfaces(5).

    source /etc/network/interfaces.d/*

    # The loopback network interface
    auto lo
    iface lo inet loopback

    # The primary network interface
    auto eth1
    iface eth1 inet static
        address 10.0.0.8/24
        gateway 10.0.0.1
        # dns-* options are implemented by the resolvconf package, if installed
        dns-nameservers 10.0.0.1 8.8.4.4
        dns-search <<domain>>.net

    auto eth2
    iface eth2 inet static
        address 10.0.0.9/24

    auto eth3
    iface eth3 inet static
        address 10.0.0.10/24

    auto eth4
    iface eth4 inet static
        address 10.0.0.11/24

    # 10G NICs, enslaved to bond0
    auto eth0
    iface eth0 inet manual
        bond-master bond0
        post-up /usr/sbin/ifconfig eth0 mtu 9000

    auto eth5
    iface eth5 inet manual
        bond-master bond0
        post-up /usr/sbin/ifconfig eth5 mtu 9000

    auto bond0
    iface bond0 inet static
        address 10.0.1.1
        netmask 255.255.255.0
        broadcast 10.0.1.255
        post-up /usr/sbin/ifconfig bond0 mtu 9000
        bond-slaves eth0 eth5
        # mode 4 = 802.3ad (LACP)
        bond-mode 4
        bond-xmit-hash-policy layer2+3
        bond-miimon 100
        bond-downdelay 200
        bond-updelay 200
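
After bringing the bond up (for example with `ifup bond0`, or after a reboot), the kernel reports its state in `/proc/net/bonding/bond0`. Running `cat` on that file is enough. The sketch below is a hypothetical helper (`bond_status` is my name, not a standard tool) that picks out the lines worth checking first: the bonding mode, the hash policy, and whether each slave's link is up. It assumes the field labels printed by current bonding drivers, which may vary slightly between kernel versions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarise a bond from the kernel's status file.
# Defaults to bond0; pass another path (e.g. /proc/net/bonding/bond1) if needed.
bond_status() {
    local file="${1:-/proc/net/bonding/bond0}"
    if [[ ! -r "$file" ]]; then
        echo "cannot read $file (is the bond up?)" >&2
        return 1
    fi
    awk -F': ' '
        /^Bonding Mode:/         { print "mode:   " $2 }
        /^Transmit Hash Policy:/ { print "hash:   " $2 }
        /^Slave Interface:/      { slave = $2 }
        # The first "MII Status" line describes the bond itself; later ones
        # follow a "Slave Interface" line and describe that slave.
        /^MII Status:/ {
            if (slave == "") print "bond:   " $2
            else             print "slave " slave ": " $2
        }
    ' "$file"
}
```

On server 1, `bond_status` should report the 802.3ad mode, the layer2+3 policy, and both eth0 and eth5 as up. If a slave shows as down, check the cabling and the LAG settings on the switch.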

Note: I opted for bond-mode 4 (802.3ad, aka LACP).

It is not possible to effectively balance a single TCP stream across the member interfaces of a bond or team. If a single stream needs more speed, faster interfaces (and possibly faster network infrastructure) must be used. This applies to every TCP stream, but it shows up most often with high-speed, long-lived streams such as NFS, Samba/CIFS, iSCSI, and rsync over SSH/SCP. With 2 x 10G bonded, for example, any one such stream is still limited to a single 10G link. The bond raises the aggregate throughput across multiple streams and peers.

My understanding is that Mode 0 (balance-rr, round-robin), Mode 3 (broadcast), and Mode 5 (balance-tlb) do not guarantee in-order delivery of TCP streams. Packets of the same stream may be transmitted down different slaves, and no switch guarantees that packets received on different switch ports will be delivered in order.

Mode 1 (active-backup) avoids this issue by sending all traffic down one active slave. Mode 2 (balance-xor) and Mode 4 (802.3ad) avoid it by hashing packet addresses to choose a slave. All packets of a given flow therefore go down the same slave; with the layer2 and layer2+3 policies, that means all traffic to a given peer. The Mode 2 and Mode 4 balancing algorithms can be changed through xmit_hash_policy, but no policy will ever balance a single TCP stream across different slaves.
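
To make the difference concrete, here is a toy bash model of which slave carries each packet of a single stream. It is not kernel code: the function names are mine, and it assumes this stream is the only traffic on the bond, ignoring real details such as other flows interleaving with it.

```bash
#!/usr/bin/env bash
# Toy model, not kernel code: which slave carries each packet of ONE TCP
# stream, assuming it is the only traffic on the bond.

# Per-packet policy (like balance-rr): packet i goes to slave i mod N,
# so consecutive packets of the stream alternate between the links.
per_packet_slaves() {       # per_packet_slaves PACKETS SLAVES
    local i out=()
    for (( i = 0; i < $1; i++ )); do
        out+=( $(( i % $2 )) )
    done
    echo "${out[*]}"
}

# Per-flow policy (like balance-xor / 802.3ad): the slave comes from a hash
# of the flow's addresses, identical for every packet of the stream, so the
# whole stream stays on one link and cannot be reordered between links.
per_flow_slaves() {         # per_flow_slaves PACKETS SLAVES FLOW_HASH
    local i out=()
    for (( i = 0; i < $1; i++ )); do
        out+=( $(( $3 % $2 )) )
    done
    echo "${out[*]}"
}
```

With two slaves, `per_packet_slaves 4 2` prints `0 1 0 1`: the stream's packets race each other over both links. `per_flow_slaves 4 2 2057` prints `1 1 1 1`, where 2057 is an arbitrary example hash.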

In my case, I opted for the layer2+3 policy, which uses the XOR of the hardware MAC addresses and IP addresses to generate the hash. This policy places all traffic to a particular network peer on the same slave.
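
A minimal sketch of the layer2+3 hash, following the formula written in the Linux kernel bonding documentation:

1. Hash the last MAC byte of each side together with the packet type.
2. XOR in both IP addresses.
3. Fold the result with right shifts of 16 and 8.
4. Reduce it modulo the slave count.

The function names are hypothetical. Treating the packet type as the IPv4 EtherType (0x0800) and the IPs as plain 32-bit numbers are my assumptions. The kernel works on raw header fields, so the exact slave it picks for a peer may differ. What carries over is that the result depends only on addresses.

```bash
#!/usr/bin/env bash
# Sketch of the layer2+3 transmit hash, after the formula in the kernel
# bonding documentation. Illustrative only: byte order and extra steps in
# the real kernel code can change which slave is chosen.

ip_to_int() {                     # dotted-quad IPv4 -> 32-bit integer
    local -a o
    IFS=. read -r -a o <<< "$1"
    echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

mac_last_byte() {                 # aa:bb:cc:dd:ee:ff -> 255
    echo $(( 16#${1##*:} ))
}

# l23_slave SRC_MAC DST_MAC SRC_IP DST_IP SLAVE_COUNT -> slave index
l23_slave() {
    local ptype=$(( 0x0800 ))     # packet type ID, assumed IPv4 EtherType
    local h=$(( $(mac_last_byte "$1") ^ $(mac_last_byte "$2") ^ ptype ))
    h=$(( h ^ $(ip_to_int "$3") ^ $(ip_to_int "$4") ))
    h=$(( h ^ (h >> 16) ))
    h=$(( h ^ (h >> 8) ))
    echo $(( h % $5 ))            # reduce modulo the number of slaves
}
```

The following examples use made-up MAC addresses and peers. `l23_slave aa:bb:cc:dd:ee:01 aa:bb:cc:dd:ee:02 10.0.1.1 10.0.1.2 2` prints `0`, and the same call with `10.0.1.3` as the destination prints `1`. Every packet to 10.0.1.2 lands on one slave, and a second peer can land on the other.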

Edit: After some further config changes, I eventually ended up with another, messier configuration:

    # This file describes the network interfaces available on your system
    # and how to activate them. For more information, see interfaces(5).

    source /etc/network/interfaces.d/*

    # The loopback network interface
    auto lo
    iface lo inet loopback

    # The primary network interface
    auto eth1
    iface eth1 inet static
        address 10.0.0.8/24
        gateway 10.0.0.1
        # dns-* options are implemented by the resolvconf package, if installed
        dns-nameservers 10.0.0.1
        dns-search <<domain>>.net

    auto eth2
    iface eth2 inet static
        address 10.0.0.9/24

    auto eth3
    iface eth3 inet static
        address 10.0.0.10/24

    auto eth4
    iface eth4 inet static
        address 10.0.0.11/24

    auto eth0
    iface eth0 inet manual
        post-up /usr/sbin/ifconfig eth0 mtu 9000

    auto eth5
    iface eth5 inet manual
        post-up /usr/sbin/ifconfig eth5 mtu 9000

    auto bond0
    iface bond0 inet static
        pre-up modprobe bonding mode=4 miimon=100
        pre-up ip link set eth0 down
        pre-up ip link set eth5 down
        address 10.0.1.1
        netmask 255.255.255.0
        broadcast 10.0.1.255
        post-up ip link set eth0 master bond0
        post-up ip link set eth5 master bond0
        post-up /usr/sbin/ifconfig bond0 mtu 9000
        bond-slaves none
        bond-mode 802.3ad
        bond-xmit_hash_policy layer2+3
        bond-miimon 100
        bond-downdelay 2000
        bond-updelay 2000
        bond-lacp-rate 1
        post-down ip link set eth0 nomaster
        post-down ip link set eth5 nomaster
        post-down /usr/sbin/ifconfig bond0 down
        post-down rmmod bonding